Reading with VR and AR (Fieldwork Day 3)

Our fieldwork continued last week with our third session at one of our local South East Queensland high schools. In this session, some of our older students (grades 9 and 10) were immersed in the VR experience of Titans from Space, and also took part in another AR activity using a Merge Cube. These activities gave students an opportunity to use both VR and AR for reading and comprehension.

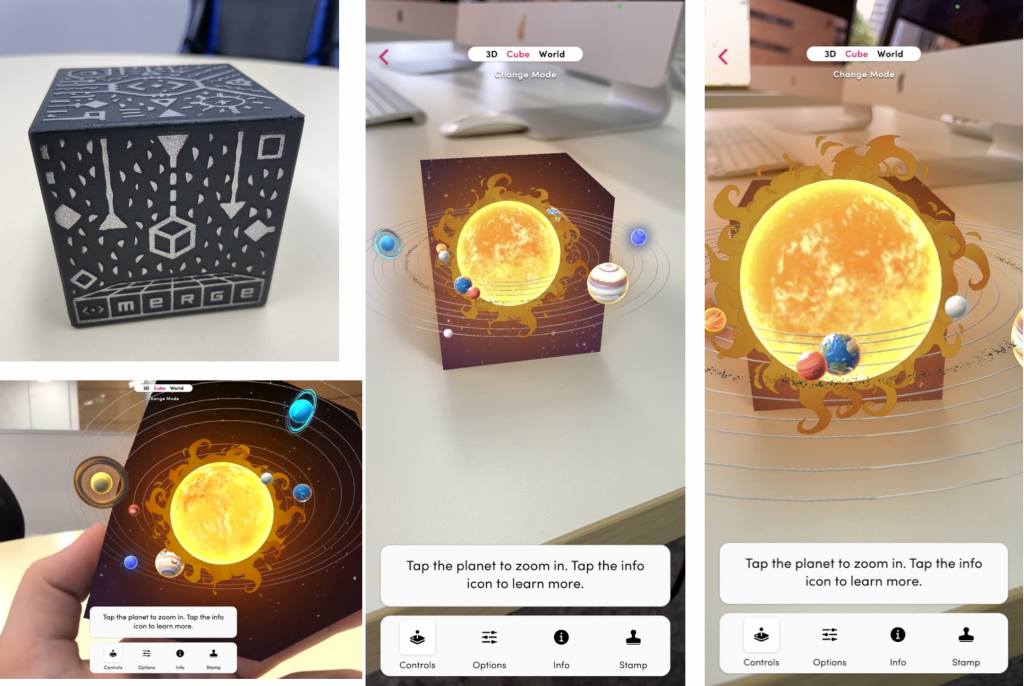

The Merge Cube is a physical foam rubber cube, about the size of a tennis ball, that provides an anchor point for the 3D visuals of the associated app, Explorer. This allows students to ‘hold’ digital 3D objects, giving them an innovative way to interact and learn within a digital world. Figure 1 below shows a Merge Cube, along with a sample of the 3D images from one of the many education-based activities available on the Explorer app.

Figure 1: The physical Merge Cube (top left) and the AR projections. The lower left panel shows a student holding and rotating the cube to view multiple perspectives of the solar system. The solar system is also animated, demonstrating the orbits of the planets around the sun.

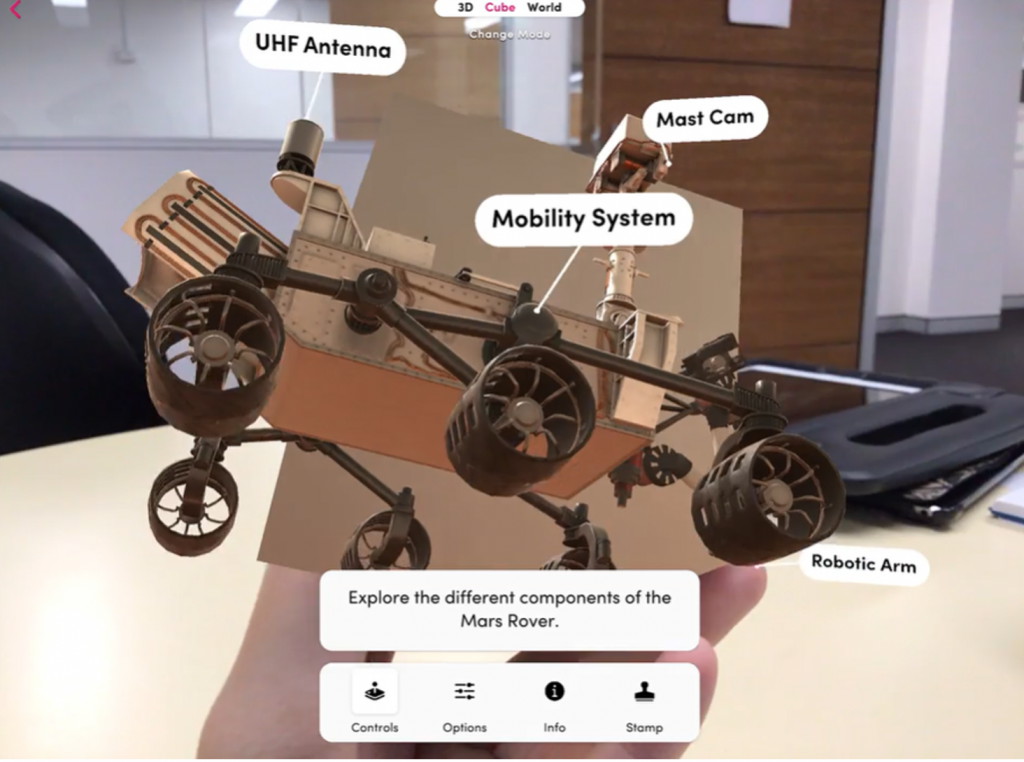

The Merge Cube ‘Explorer’ app provides many education-based activities, ranging from ‘Galactic Explorer’ and a ‘Ticket to Mars’ to learning about frogs and related amphibian anatomy through a virtual dissection. The example in Figure 2 shows the annotated labels of the Mars Rover, where students can move and rotate the cube to see all sides and perspectives of the rover. Each activity begins with an overview, and then allows students to tap into the activity and read related information projected on the cube. After students have finished exploring the various items, a quiz is available to check their understanding of the content covered in the AR module.

Figure 2: An overview of some of the components of the Mars Rover.

Students can touch, move, and rotate the physical cube, and can also enlarge or shrink the AR projections on the cube. Such touch and manipulation align with our earlier observations and analysis, including our work in makerspaces (Friend & Mills, 2021) and on the use of haptics and other sensorial and bodily interactions with digital texts in VR contexts (Mills et al., 2022; Mills & Exley, 2022). Some of this work can be accessed from the reference list below, or via the publications tab on our website.

Mark Williamson, from our partner organisation, Big Picture Industries Inc., joined us again for photography with both DSLRs and iPads. The students identified multiple points of interest in nearby parkland and produced around 60 to 80 photos each. They then edited and manipulated their images and shared this work with their classmates and teachers.

References and some examples of our earlier work

Friend, L., & Mills, K. (2021). Towards a typology of touch in multisensory makerspaces. Learning, Media and Technology, 46(4), 465–482. https://doi.org/10.1080/17439884.2021.1928695

Mills, K. A., & Exley, B. (2022). Sensory literacies: The full sensorium in literacy learning. In R. Tierney, F. Rivi, G. Smith, & K. Ercikan (Eds.), International encyclopedia of education (4th ed., pp. 1–7). Elsevier. https://doi.org/10.1016/B978-0-12-818630-5.07023-8

Mills, K. A., Scholes, L., & Brown, A. (2022). Virtual reality and embodiment in multimodal meaning making. Written Communication, 39(3), 335–369. https://doi.org/10.1177/07410883221083517